Episode 3: Many Unhappy Retail Returns – Show Notes

Retail returns hurt the bottom line. They cause headaches for customer service, stocking, and profitability. It doesn’t help that some people have promoted using returns policies as a way of renting items not actually needed or wanted without being out of pocket. As much as that is a pain, it’s still just a best case scenario.

Retailers are at constant risk of being used as a front for organized retail crime through retail returns. They have to take precautions to prevent fraudsters from using them for money laundering and entangling them in legal nightmares.

Historically, this meant implementing retail returns policies that applied universally. Sure, you might get the occasional, kind customer service person who let you return an item after the 90-day window had passed – but that was the exception, rather than the rule.

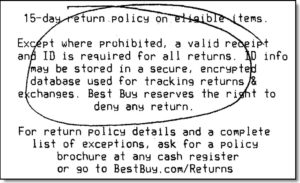

Now, in the age of data science and personalization, retailers are taking a different tack. They are tracking each customer’s purchase and return pattern to identify individual bad apples. Customers with problematic behavior are told that, once they reach a score calculated by their own data, they will be banned from making further retail returns. Among the companies implementing this new technique are Home Depot, Best Buy, and now even Amazon.

Listen to the full episode as we explore the ethics behind this change in retail returns policies and the algorithms driving them.

Additional Links on Retail Equation

How Your Returns Are Used Against You – Wall Street Journal article on Best Buy and others using retail returns to flag problematic behavior

Some Retailers Are Holding Your Returns Against You – NPR article covering the same topic as the Wall Street Journal

Retail Equation Website – The Retail Equation has a consumer side to its website that explains why it helps spot retail returns abuse and fraud and also provides a link to request your Return Activity Report

Episode Transcript

Today, we’re going to be talking about an article that recently came out in the Wall Street Journal about retailers who are using return information against their customers. So, Marie, give us a synopsis.

Marie: So basically, the Wall Street Journal article goes into the fact that several large retailers, including retailers like Home Depot and Best Buy, are working with a company called Retail Equation. And they’re using this company to basically help them solve a couple different problems. So the question that they’re asking from a data science perspective is, how do we decrease the number of fraudulent returns that are coming to our stores? And then they’re basically using data science to come up with different scores and different thresholds to say that, if somebody shows these different activities, or they show these different patterns, then we’re going to flag them in our system and let them know that if they proceed with the return, they may not be able to make retail returns at our store for a year, for example.

So in terms of the ethics behind that question, Lexy, what are some of the things that you think about when you hear this story?

Lexy: The first thing that I think about, as a data scientist, is type 1 versus type 2 error. Statistically, a type 1 error is a false positive and a type 2 error is a false negative. Essentially, where do you want to err – on the side of caution or on the side of potentially missing something that could be problematic?

For retailers, it’s much more costly if they have a false negative – meaning that they do not flag a fraudulent returner as being fraudulent or being problematic. It’s much more expensive for them to miss that than to accidentally annoy someone who is not fraudulent. That would be a false positive. False positives are the people who, as the article specified, went on Twitter and said, “I don’t know why I’m being flagged for this behavior. I’m a good customer, I just had to return something and they’re telling me that now I’m a problem child.” As a data scientist, balancing type 1 and type 2 error is what comes to mind initially.

From an ethical standpoint, I understand that there’s a need to identify fraud. And there’s certainly a very large argument to be made that returns end up contributing to things like money laundering or to organized crime rings and all those different things. So ethically, it’s something that should be approached. But it’s a matter of, what do you do to handle these types of errors? And how do you adjust? How do you look at those thresholds differently over time?
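To make the trade-off concrete, here is a minimal sketch of choosing a flagging threshold when a missed fraudulent return (a false negative, type 2) is assumed to cost more than wrongly flagging a good customer (a false positive, type 1). The scores, labels, cost figures, and function names are all invented for illustration; nothing here reflects how Retail Equation or any retailer actually scores returns.

```python
# Hypothetical risk scores (0 to 1) paired with ground truth (True = fraudulent).
# All values are made up for illustration.
scored_returns = [
    (0.10, False), (0.20, False), (0.35, False), (0.40, True),
    (0.55, False), (0.60, True), (0.80, True), (0.90, True),
]

# Assumed costs: a missed fraud costs more than annoying a good customer.
COST_FALSE_NEGATIVE = 100.0  # type 2 error: fraud not flagged
COST_FALSE_POSITIVE = 20.0   # type 1 error: good customer flagged


def count_errors(threshold, data):
    """Return (false_positives, false_negatives) when flagging score >= threshold."""
    false_pos = sum(1 for score, is_fraud in data if score >= threshold and not is_fraud)
    false_neg = sum(1 for score, is_fraud in data if score < threshold and is_fraud)
    return false_pos, false_neg


def total_cost(threshold, data):
    """Weight each kind of error by its assumed cost."""
    false_pos, false_neg = count_errors(threshold, data)
    return false_pos * COST_FALSE_POSITIVE + false_neg * COST_FALSE_NEGATIVE


def best_threshold(data, candidates):
    """Pick the candidate threshold with the lowest total cost."""
    return min(candidates, key=lambda t: total_cost(t, data))


candidates = [0.3, 0.5, 0.7]
for t in candidates:
    fp, fn = count_errors(t, scored_returns)
    print(f"threshold={t}: false positives={fp}, false negatives={fn}, "
          f"cost={total_cost(t, scored_returns)}")
print("lowest-cost threshold:", best_threshold(scored_returns, candidates))
```

With these assumed costs the lowest threshold wins, which means catching every fraudulent return at the price of flagging two good customers. That is the asymmetry Lexy describes.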

Marie: Would this also be a situation where you are trying to find that signal in the noise? Because they were saying that the National Retail Federation says that retail returns only account for 10% of exchanges and about 6.5% of those returns are fraudulent. So you’re already talking about returns already being a small portion of exchanges and then the fraudulent ones already being a very small portion of that set.

Lexy: You’re talking about sub 1%. This is very common. I mean, this happens a lot when you think about fraud in general and trying to identify fraudulent patterns. Realistically, what you’re looking for is patterns of behavior. It’s a pattern recognition problem.
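The "sub 1%" figure follows from multiplying the two rates Marie quotes. The sketch below does that arithmetic and then shows why such a low base rate makes false positives a concern. It reads "exchanges" as purchases, and the detection rate and false-alarm rate are assumed values chosen only for illustration, not figures from the article.

```python
# Rates quoted in the episode (attributed to the National Retail Federation).
RETURN_RATE = 0.10               # share of purchases that are returned
FRAUD_SHARE_OF_RETURNS = 0.065   # share of returns that are fraudulent

# Fraudulent returns as a share of all purchases.
fraud_share_of_purchases = RETURN_RATE * FRAUD_SHARE_OF_RETURNS
print(f"fraudulent returns per purchase: {fraud_share_of_purchases:.2%}")

# Assumed, illustrative classifier quality (not from the article).
ASSUMED_DETECTION_RATE = 0.90    # fraction of fraudulent returns that get flagged
ASSUMED_FALSE_ALARM_RATE = 0.05  # fraction of legitimate returns that get flagged


def flagged_precision(fraud_rate, detection_rate, false_alarm_rate):
    """Share of flagged returns that are actually fraudulent (Bayes' rule)."""
    true_flags = fraud_rate * detection_rate
    false_flags = (1 - fraud_rate) * false_alarm_rate
    return true_flags / (true_flags + false_flags)


precision = flagged_precision(
    FRAUD_SHARE_OF_RETURNS, ASSUMED_DETECTION_RATE, ASSUMED_FALSE_ALARM_RATE
)
print(f"share of flagged returns that are fraudulent: {precision:.1%}")
```

Under these assumed rates, only a little over half of the flagged returns would actually be fraudulent, even though the hypothetical system wrongly flags just 5% of legitimate returns.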

In this case, the patterns depend on the equation that Retail Equation is using, essentially how they’re setting up that problem. You could find that, for instance, there is too simplistic a set of rules. For example, let’s say you have a customer who has made four purchases and, of those, three times they had to come in and return something. They may say, “Oh, they have a 75% return rate. Meaning every time they have an order, 75% of the time I can expect that they’re going to come in and return something. I think that’s a problem.” Now, it may or may not be truly a fraud problem. It’s certainly an interesting pattern of behavior. But if that were the only criterion, I’d be very concerned.
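As a sketch of why a single return-rate rule is too simplistic, the snippet below implements the hypothetical "75% return rate" check from Lexy's example. The function names, the use of 0.75 as a cutoff, and the minimum-purchase guard are illustrative assumptions, not anything Retail Equation is known to use.

```python
def return_rate(purchases, returns):
    """Fraction of purchases that were returned."""
    if purchases == 0:
        return 0.0
    return returns / purchases


def naive_flag(purchases, returns, max_rate=0.75):
    """Flag on return rate alone, ignoring how little history there is."""
    return return_rate(purchases, returns) >= max_rate


def guarded_flag(purchases, returns, max_rate=0.75, min_purchases=20):
    """Same rule, but only once there is enough history to trust the rate."""
    return purchases >= min_purchases and return_rate(purchases, returns) >= max_rate


# Lexy's example: four purchases, three returns.
print(return_rate(4, 3))     # 0.75
print(naive_flag(4, 3))      # True: flagged on only four purchases
print(guarded_flag(4, 3))    # False: too little history to judge
print(guarded_flag(40, 30))  # True: same rate, much more evidence
```

Even the guarded version looks at one feature only. It is here to show how fragile a lone ratio is, not to suggest a workable fraud rule.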

I doubt that Retail Equation has that simplistic a set of rules. Somebody has thought about it in terms of how you structure the features. We talked about feature engineering and understanding what features were going to go into the model. And so here, you’d really want to start looking at more patterns, more aspects to the pattern.

One of the interesting things that the Wall Street Journal article called out was that Retail Equation does not cross the retailers’ boundaries in terms of data. So they’re not necessarily using information about a customer at one retailer to judge how that customer is acting at another retailer. So if, for example, I’m a customer of both Best Buy and Home Depot, they’re not going to use the information of my behavior at Best Buy to tell Home Depot whether or not I’m a risk.

Marie: Exactly.

Lexy: Which could be a very interesting thing to look at both for and against whether someone’s fraudulent. Because if that’s just the way that I shop and I’m not a problem child, I just happen to go in, try some stuff, see if it works in my house, and then go “eh, maybe not,” and make a return, it’s not necessarily fraud. Like I said, it’s a kind of weird pattern of behavior to the retailer. They would assume that most of their customers are going to have the “purchase-use-done” kind of pattern, but it doesn’t necessarily represent fraud.

On the flip side, someone who does that at multiple retailers could actually be doing fraud depending on the scale and that’s really a bigger factor. One of the interesting things in there is that some of the scales of these seemed really small.

Marie: For sure. One example in this article was actually talking about somebody that had bought four different cell phone cases for their son, assuming that they would like one, only need one, could return the other three. When they tried to return the other three, Best Buy, using this system, basically got a score back on him and informed him that if he completed the return that his score within the system would prevent him from being able to do another return with Best Buy for a whole year.

It’s interesting that, as part of the process, they were informing people so they would know that they wouldn’t be able to do returns in the future. They are also basically educating people on return behavior: these are the types of things that are good behavior, these are the types of things that are bad behavior. If you do the bad behavior, even if it’s not for a fraudulent reason, you’re still going to get technically a bad score with us in terms of being able to use our return services in the future.

Lexy: It’s an interesting one when you think about ethics in terms of changing behaviors. This is something that we see often with credit scoring. We’ll have an episode on another type of credit scoring system that’s going to be coming up in China soon. The scoring process, understanding that you have a score – that you’re represented by a score for some behavior – inherently makes you kind of think about your behaviors differently. So now, it’s, if I want to get someone a gift, do I have to bring them with me? That way I don’t end up having to return something later because it didn’t fit or it wasn’t for the right phone or it wasn’t the color that they wanted.

Marie: Or making sure that you get it with the gift receipt so that way they can exchange it if needed?

Lexy: Then it’s on them. And so now you’re saying, “okay, I can only buy one. Because if I come back, they’re not going to let me do anything about it.” It takes away the safety net in the same kind of way that, as a consumer, if I look at my credit score and I see that my score is dropping, my safety net for gaining credit – gaining access to financial institutions’ funds – has decreased. Now I have to think about my behavior differently because I don’t have the ability to go reach out for more financing. So it’s a similar type of problem in that you think about – how are you shaping customer behavior? Realistically, in this case, you’re likely shaping their behavior to go to someone else because there are options when you’re talking about a retailer.

If you’re Home Depot and you’re doing this and you say “well, if you return with us, then there’s a chance that we may not allow you to return again for the next year.” I may say, “well, you know what? There’s a Lowe’s across the street. Thanks very much.”

Marie: But at the same time, it also sounds like this is something that many different stores are looking at. It’s not just one retailer or the other. So it could potentially be, like with credit scores, where it becomes a bigger platform.

I think consumers are already starting to ask questions. And, based on this article, it sounds like, for example, Best Buy already put together a 1-800 number so that if somebody did have an experience where they thought they were being tagged for fraudulent activity, but it wasn’t accurate, they had a way to contact and dispute it. But they even said that when it comes to getting this type of report and looking at the score, because it’s being generated on the fly, it’s not recording the transactions that helped inform the algorithm when it made the actual score and decision. It’s very hard for them to be able to track back and understand why the score was basically put up the way that it was.

The quote from the article is “it isn’t easy for shoppers to learn their standing before receiving a warning. Retailers typically don’t publicize their relationship with Retail Equation. And even if a customer tracks down his or her return report, it doesn’t include purchase history or other information used to generate the score. The report also doesn’t disclose the actual score or the thresholds for getting barred.”

So I think it’s interesting that their information, in a way, is being protected because it’s not necessarily being stored by the Retail Equation company. They’re using information on more of a real-time basis but not storing it. But they’re still keeping a score in terms of what that person is.

Lexy: Which is an interesting one. So there are two things that this makes me think of. One is access to information about yourself.

We’ve seen this in a number of other case studies, especially lately, where we have very large organizations like Facebook and Instagram and others allowing you to download – what do you have on me? What is all the data you’ve collected on me?

In this case, my guess is that Retail Equation has this data. It can’t just be on a real-time basis. It had to have trained a model over a set of data at some point. The fact that it happens in this manner, meaning that they have some sort of real-time system to say, “hey, this is going to change the score. Now it’s going to be a problem,” is essentially a simulator, which is good for them to have. So, one is how do you get access to that? If I, as a customer, am being penalized for a behavior, I want to understand what it is that I did, right? Again, changing that consumer behavior – changing that behavior in general. I want to be able to access the information that is causing you to think this about me.

The other thing that I’m thinking about with this is that this is a perfect opportunity to think about retraining, or a kind of human-in-the-loop machine learning, where you use inputs even after a decision has been made to say whether this was or wasn’t correct.

Marie: Good point.

Lexy: You’d want to be able to feed that back when you go through the next iteration of model training. Some of these are realistically changing all the time. So when you think about machine learning and AI, typically you’re talking about a set of models that re-tune themselves with each instance of new data coming in. That could be every single transaction and you’d want to be able to say “this one we got wrong. This one needs to be changed.” So that the rules engine, hopefully, behind the scenes, will learn that this is not truly fraud; this is somebody who was a good customer and now had a horrible experience, and it was not accurate. And so being able to tune for those inaccuracies over time is really important.

So when you’re thinking about the ethics behind this, think about not only how you’re treating the data and what you’re looking for, but also how you deal with it on an ongoing basis. We talked a little bit about re-tuning a model, right? That care and feeding process of a model. This is a perfect example of why you need to go back and be able to rehash that model, tune it again, try and take into consideration some of these times where you’ve had someone proactively raise their hand and say, “hey, I’m not a problem. Don’t flag me.”
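The feedback loop Lexy describes can be sketched as follows. This is a toy illustration under stated assumptions: the record layout, the `apply_dispute_feedback` and `retune_threshold` helpers, and the data are all hypothetical, and "retraining" is reduced to re-picking a single score threshold rather than refitting a real model.

```python
# Each record: a hypothetical risk score and the label the system currently believes.
history = [
    {"customer": "A", "score": 0.30, "fraud": False},
    {"customer": "B", "score": 0.65, "fraud": True},   # flagged, later disputed
    {"customer": "C", "score": 0.70, "fraud": True},
    {"customer": "D", "score": 0.85, "fraud": True},
]

# Disputes that a human reviewer upheld: these customers were false positives.
upheld_disputes = {"B"}


def apply_dispute_feedback(records, disputes):
    """Return a copy of the records with upheld disputes relabeled as not fraud."""
    return [
        {**r, "fraud": False} if r["customer"] in disputes else dict(r)
        for r in records
    ]


def retune_threshold(records, candidates):
    """Pick the candidate threshold that misclassifies the fewest records."""
    def errors(threshold):
        return sum((r["score"] >= threshold) != r["fraud"] for r in records)
    return min(candidates, key=errors)


candidates = [0.5, 0.6, 0.7, 0.8]
before = retune_threshold(history, candidates)
after = retune_threshold(apply_dispute_feedback(history, upheld_disputes), candidates)
print("threshold before feedback:", before)
print("threshold after feedback:", after)
```

Once the upheld dispute is fed back in as a corrected label, the chosen threshold rises and customer B would no longer be flagged. A production system would refit its model on the corrected labels, but the principle is the same.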

Marie: Yeah. So this would be an example of how to deal with the false positives that are coming out of this.

Lexy: Exactly.

Marie: That’s our quick take on the Wall Street Journal article talking about retailers and how they’re trying to prevent fraud and some of the data science ethics behind it.

Lexy: We’ll have a link to the article, as well as to a couple other resources that were related, down in the post below.

We’ll see you on our next quick take. Thanks so much.

I hope you’ve enjoyed listening to this episode of the Data Science Ethics Podcast. If you have, please like and subscribe via your favorite podcast app. Join in the conversation at datascienceethics.com, or on Facebook and Twitter at @DSEthics. See you next time.

This podcast is copyright Alexis Kassan. All rights reserved. Music for this podcast is by DJ Shahmoney. Find him on Soundcloud or YouTube as DJShahMoneyBeatz.

As someone who has multiple years’ experience handling retail returns (I know that’s nothing to brag about), this topic is near and dear to me. I believe there are two main hurdles to proper implementation of an anti-fraud system. The first is difficulty in accurate customer tracking. As simple as it sounds, some stores market themselves as not offering any reward membership or other system to tie a customer to a purchase. Combine that with the types of fraud-oriented customers that absolutely do NOT want to be tracked (paying in cash, refusing optional questions) and the issue becomes more complicated.…

Fraud is a way of gaming the system. Change the rules, and it will change the behaviors… but there will still be people trying to game the system. In the case of Retail Equation, one interesting note on customer tracking is that they require a state-issued ID. Not that it’s impossible to get a fake ID (just ask any under-age college kid), just that it’s a helpful deterrent – one more hurdle in the game. One of the possibilities that comes to mind in this is that there have been several data breaches in which drivers license information was exposed.…

Short answer: We can’t. My company (it was Publix, who cares) prides itself on customer service, so a calculus was clearly made to invest in customer satisfaction over the cost of scrutinizing returns. If a customer stomps up with an item, and no receipt, and we aren’t even sure we sell that item, they’re getting a refund. That might leave a lot of breathing room for a cottage industry of scurrilous people that return merchandise constantly, but it can’t be stopped in that respect. In terms of ethics I’d almost rather discuss the psychological effects of an employee that spends…
