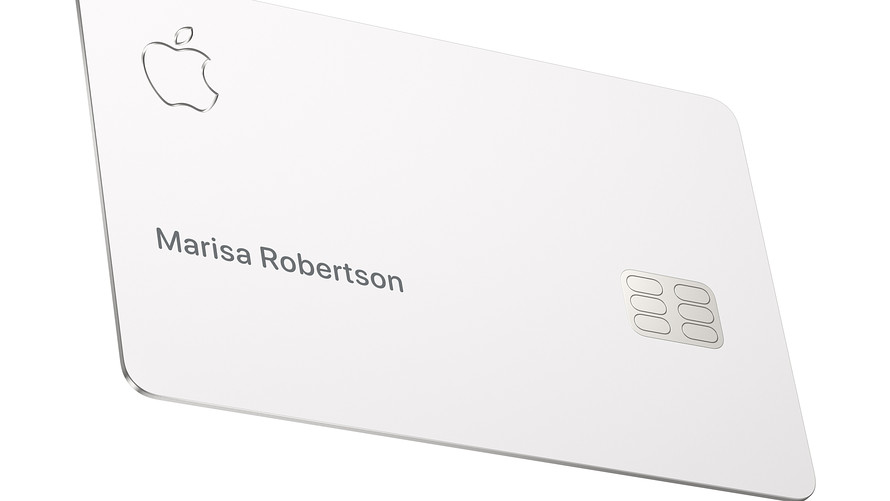

Show Notes on Apple Credit Card

Apple recently decided to offer a branded Apple credit card in conjunction with Goldman Sachs. This is the first foray into consumer credit cards for both companies and all did not go according to plan.

David Heinemeier Hansson, creator of Ruby on Rails, applied for the card and tweeted that he received a credit limit 20 times that of his wife. Steve Wozniak, co-founder of Apple, confirmed that he and his wife had each applied for the Apple credit card with exactly the same financial assets and he was granted a limit 10 times that of hers. Accusations of gender bias in the algorithm determining credit limits abound.

To complicate matters, New York state has passed legislation under which any algorithmic bias, intentional or unintentional, resulting in discriminatory treatment of any protected class is illegal.

How could this happen? How could an algorithm this flawed get into production use? Who is responsible for fixing it? And who might be going to jail?

Additional Links on Apple Credit Card and Unfair Limits

Viral Tweet About Apple Card Leads to Goldman Sachs Probe: Bloomberg article describing the events kicking off the algorithmic bias investigation

Apple’s ‘Sexist’ Credit Card Investigated by US Regulator: BBC article including analyst commentary from Leo Kelion theorizing on potential causes

How Goldman Sachs Can Regain User Trust After Apple Card Discrimination: Observer coverage including quotes from Cole McCollum as mentioned in the episode

Apple Credit Card and Unfair Limits Episode Transcript

Welcome to the Data Science Ethics Podcast. My name is Lexy and I’m your host. This podcast is free and independent thanks to member contributions. You can help by signing up to support us at datascienceethics.com. For just $5 per month, you’ll get access to the members-only podcast, Data Science Ethics in Pop Culture. At the $10 per month level, you will also be able to attend live chats and debates with Marie and I. Plus you’ll be helping us to deliver more and better content. Now on with the show.

Marie: Hello everybody and welcome to the Data Science Ethics Podcast. This is Marie Weber.

Lexy: and Lexy Kassan.

Marie: and today we are talking about the Apple credit card and some of the questions that have arisen after people started getting their credit lines. So Lexy, I’m interested to hear your thoughts since I know you’ve done some work in the financial space before. The summary is people were applying for their Apple credit cards. One of the people actually turns out to be Steve Wozniak, one of the co-founders of Apple, and he found that even though he has the same financial history and account as his wife, he was able to get a line that was 10 times higher than hers. This was reported by other people as well, who found that males in a relationship were getting higher levels than the females in the relationship. That started bringing up questions of why this is happening, and people asking about the data model that was developed by Goldman Sachs for Apple’s credit card. So what are some things that come to mind as you see these headlines in the news?

Lexy: There are a few that come to mind. The first is that this was Goldman Sachs’ first foray into credit cards. Their primary line of business for a very long time has been investments, and so my first thought is, did they not have the correct oversight for algorithms and regulation that they should? The second one is that in the articles that we were reading, the primary one being from the BBC, which we’ll link to, Goldman Sachs specifically said that they’ve excluded factors from their model that would contribute to a bias against any protected class of citizen. However, as we have described on this podcast before, there are many possible ways that proxy variables could have snuck in. While they didn’t necessarily put gender into, for example, an application, and they weren’t bringing in variables that were specific to, say, sexuality or race or other protected classes, bias could still potentially sneak in depending on what variables they were bringing in and how they’d trained their model.

Lexy: One of the things that I think about is, is it possible that even the name of the applicant could have been used as a potential biasing factor? It sounds maybe a bit strange, but in a follow-up article that we had seen from the Observer, there was a quote from an analyst who I thought had a very insightful thought on it, which is that these machine learning algorithms are really good at finding minuscule latent trends that humans wouldn’t even think about, and they will exploit them. That’s exactly what they’re meant to do. They’re trying to find patterns that are indicative of behaviors. And what if, for example, Steve and anyone named Steve...

Marie: Steve Wozniak.

Lexy: Well, Steve Wozniak, but just Steve, you know, in general traditionally a very male name. Anybody named Steve had a higher credit line, so the model may have said, well, if their name is Steve, we’ll give them a higher credit line. Versus, I don’t know what Steve Wozniak’s wife’s name is, I’m just going to say Anne… ’cause reasons. Let’s say it’s Anne. Well, anyone named Anne has historically had a lower credit limit. We should set their credit limit lower. And you’ve just introduced gender bias into your model, not because you specifically pulled gender into your model, but because you have a proxy variable.
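The proxy effect described here can be illustrated with a rough Python sketch. It assumes a tiny, made-up list of applicants (the names, genders, and the `proxy_strength` helper are all hypothetical) and simply measures how much better a first name guesses gender than a blind guess would. It is not how any real credit model was audited.

```python
from collections import Counter, defaultdict

# Hypothetical toy records: (first_name, gender).
# Gender is held out of the model and used here only for auditing.
applicants = [
    ("Steve", "M"), ("Steve", "M"), ("Steve", "M"),
    ("Anne", "F"), ("Anne", "F"), ("Anne", "F"),
    ("Bob", "M"), ("Bob", "M"),
    ("Pat", "M"), ("Pat", "F"),
]

def proxy_strength(records):
    """Return (baseline, proxy) accuracy for guessing the protected attribute.

    baseline: always guess the most common protected value overall.
    proxy: guess the most common protected value seen for each feature value.
    """
    overall = Counter(protected for _, protected in records)
    baseline = overall.most_common(1)[0][1] / len(records)

    by_feature = defaultdict(Counter)
    for feature, protected in records:
        by_feature[feature][protected] += 1
    correct = sum(c.most_common(1)[0][1] for c in by_feature.values())
    return baseline, correct / len(records)

baseline, proxy = proxy_strength(applicants)
print(f"baseline accuracy={baseline:.2f}, accuracy using first name={proxy:.2f}")
```

When the second number is much higher than the first, the feature carries information about the protected attribute, and a model that uses it can reproduce a gender pattern without ever seeing a gender column.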

Marie: And this is something that we’ve even seen in other stories that we’ve covered. Like we have covered AI being used in different models for hiring candidates and depending on the data set that is used to train that model, you can get those types of biases pulled back in.

Marie: So I was just reading a story actually about Google working on an algorithm for hiring, and in theirs they submitted resumes from people that had done well in the company, and it ended up biasing it towards people that were male because a lot of the resumes had male names like Bob on them. And so if somebody was male, the algorithm started selecting for them, not because of what the data scientists had intended to train on, but because it had found those other associations.

Lexy: The one that I was thinking of was Facebook’s hiring algorithm, which they had removed the names from, but they left in the names of colleges or organizations, and what they found was that the model picked up on things like women’s chess club or women’s college or girls’ soccer or something like that, and because of the word women’s or girls or female, it specifically selected differently. There are a lot of ways that these variables can enter a machine learning model. You have to be very cognizant of what’s going in and what you have to do to truly remove that.

Lexy: One of the examples that I always think back to is really what got me interested in data science ethics to begin with, which was a financial application. We were basically required to submit extensive documentation of every attribute, every variable, every algorithm we were using to a regulatory group within the bank to ensure that we were not biasing, we were not able to potentially bias our application based on the data that we were putting into the system.

Lexy: They were very stringent about this, and even though the things that I thought about with regard to what we were doing, which was really marketing, it wasn’t making a true financial decision, but even there we were told you cannot have age or any proxy for age as factors within the model. So even though some of the things we were doing were trying to determine who might be close to retirement, we couldn’t use age in that. As a data scientist contributing to marketing, it seemed a bit farfetched to think, well, I’m going to evenly distribute this to people who are 18 to 80. That seems odd to me, but there are cases like this where the financial decisions that are being made are much more impactful.

Lexy: The ability to have a line of credit, the ability to extend that credit, those are the times where regulatory really does need that level of depth in their scrutiny, and it seems to me as though that was not applied at Goldman Sachs, or that it was done as such an afterthought that it got to the co-founder of Apple. The other part that I feel like is kind of unfortunate for Apple, but they dug themselves in, is they said that this credit card was created by Apple, not a bank. Right? So now if it’s the Apple credit card and the Apple co-founder is saying, what’s going on, our credit card isn’t doing what it should, they’re kind of on the hook to fix it. So when we think about retaining responsibility, as we talked about in our last episode, there is a joint responsibility here between Goldman Sachs and Apple to resolve this problem.

Marie: The other actor that shows up in these articles that we’ll link to from the BBC and the Observer is New York’s Department of Financial Services, which has also specified that any discrimination, intentional or not, violates New York law. So the fact that it is documented that discrimination happened means that the algorithm was not ready for prime time and should not have been released.

Lexy: It’s illegal. It’s violated the law. Now what happens? The credit cards are out there. Are they going to just blanket increase credit limits for women who have the credit card? Are they gonna decrease credit limits for men that have the credit card? How do they balance the risks now that they realize that their algorithm has flaws, and what does it mean that it’s violated the law? Who’s held accountable for that? We’ve determined there is responsibility in multiple places. Certainly more than one person worked on this algorithm. Certainly more than one person looked at this algorithm. Are you really going to hold legally culpable everyone who touched it? Where does that come down? Is it Apple? Is it Goldman Sachs? It’s going to end up having some sort of penalty from the state. What does that mean?

Marie: I feel like there’s an opportunity for New York to help in this context, redefine what would need to happen to truly test a model like this before it could go into production before it could go into prime time.

Lexy: Typically you do that. You do have a dataset that you run through your algorithm and see does it create the results that you would expect. What I have to wonder in that is did they mock up every field? If you were testing an algorithm for financial credit and you said, well, I just want to grab credit limits and like I want to grab financial information and see what comes back. If you, let’s say created your dataset and it didn’t have names, but your real life data set did, maybe just maybe you’d have missed it, maybe you’d have tested and thought this all looks good. I see that people with roughly the same credit score, roughly the same debt to income ratio, roughly the same repayment schedules. All these financial metrics seem to come out the same. That looks equitable. Sure, but you didn’t include the names.

Marie: So with that field masked, then the algorithm behaved differently.

Lexy: Correct. Sure. You didn’t get the variation that you would have seen. It sounds so simple, but it’s that kind of simple stuff that gets missed every single day. It’s like the little things that you think that has no bearing, it doesn’t matter. But if it were something that basic, it is entirely feasible. It’s that type of data manipulation that happens every day and it’s something so simple that just it messes you up every time.
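The testing gap described in this exchange can be sketched as a name-swap check, written here in Python under stated assumptions. Both scoring functions (`biased_limit`, `fair_limit`), the helper `name_swap_violations`, and the applicant records are hypothetical stand-ins, not anything from the actual Apple Card model. The idea is to keep every financial field fixed, change only the first name, and see whether the limit moves.

```python
def biased_limit(applicant):
    """Hypothetical stand-in for a trained model that has latched onto names."""
    base = applicant["credit_score"] * 10
    return base * 2 if applicant["first_name"] == "Steve" else base

def fair_limit(applicant):
    """Hypothetical stand-in for a model that ignores the name field."""
    return applicant["credit_score"] * 10

def name_swap_violations(model, applicants, names):
    """Return applicants whose limit changes when only first_name is swapped."""
    flagged = []
    for applicant in applicants:
        # Score copies of the same applicant that differ only in first name.
        limits = {model({**applicant, "first_name": n}) for n in names}
        if len(limits) > 1:
            flagged.append(applicant)
    return flagged

# Made-up test data that, unlike the mocked set in the story, includes names.
applicants = [
    {"first_name": "Anne", "credit_score": 720},
    {"first_name": "Steve", "credit_score": 720},
]
names = ["Steve", "Anne"]

print(len(name_swap_violations(biased_limit, applicants, names)), "flagged by biased model")
print(len(name_swap_violations(fair_limit, applicants, names)), "flagged by fair model")
```

A test set without the name field could never trigger this check, which is the point being made: the test data has to carry every field the production data carries.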

Marie: And in the BBC article there’s actually an analyst, Leo Kelion, who discusses that part of the problem is that the Goldman Sachs model is a black box, and so being able to define exactly what happened can be difficult.

Lexy: I don’t know that there’s anything specifically different about Goldman Sachs’ algorithm for their credit limit determination versus any other bank’s. You’re not going to go to Chase and say, I would like to know exactly why my limit was set at X. They couldn’t explain that to you. It’s not Goldman Sachs alone that can’t explain how that specific number was applied.

Marie: This is something that we’ve talked about in other episodes where a lot of the machine learning algorithms, because they’re basically training themselves, do become a black box where it’s not specifically known how they’re getting from the inputs to the outputs. Sure. Real time.

Lexy: Usually it’s not necessary to try to reverse engineer all of that. It becomes very necessary when you run into problems like this and you realize that there’s bias. There are algorithms for finding bias and algorithms that you could apply that clearly weren’t applied. There are explainability packages that you can apply against a machine learning application to try to explain it to you. The issue that you have with things that are very complex, like these large neural nets that are used very commonly for deep learning and so forth, is that for a human to try to parse apart everything that’s gone on in that black box, it would take an inordinate amount of time and brain power, and we simply don’t have that type of capacity. You’d want to understand the largest cases, the ones that apply to most of the data, and then you try and dig into maybe some of those smaller results.
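One very simple example of an algorithm for finding bias is a group comparison of outcomes. The Python sketch below assumes a made-up list of (gender, credit limit) decisions and a hypothetical helper `limit_ratio`. It reports the ratio of the lowest group average to the highest. The 0.8 cutoff is borrowed from the "four-fifths" rule of thumb used in US employment guidelines and is only an assumption here, not a legal test for credit decisions.

```python
from statistics import mean

# Made-up audit data: (gender, granted credit limit in dollars).
decisions = [
    ("M", 20000), ("M", 15000), ("M", 25000),
    ("F", 2000), ("F", 1500), ("F", 2500),
]

def limit_ratio(records):
    """Return (ratio, averages): lowest group average divided by the highest."""
    by_group = {}
    for group, limit in records:
        by_group.setdefault(group, []).append(limit)
    averages = {group: mean(limits) for group, limits in by_group.items()}
    return min(averages.values()) / max(averages.values()), averages

ratio, averages = limit_ratio(decisions)
THRESHOLD = 0.8  # assumed rule-of-thumb cutoff, not a regulatory requirement

print(averages)
print(f"ratio={ratio:.2f}, flag for review={ratio < THRESHOLD}")
```

A raw comparison like this does not control for legitimate differences in credit history, so a low ratio is a prompt to dig deeper with explainability tools rather than proof of discrimination by itself.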

Lexy: Generally speaking, you can only handle trying to reason out so many different cases. Machine learning is there for scale. The whole point is that it can scale larger than a human mind can scale. Generally it’s going to be an awful tough call to say, well, we need to be able to interpret every little nuance of this model. We’ve specifically designed systems to do more than we could, so we either have to scale back the system or we need to come up with a system to be an adversary to the system we created, which is what typically happens. You have an adversarial neural net or an adversarial algorithm that you would pit against it. So in this case, think of it as your credit decision algorithm pitted against a regulatory algorithm. The regulatory algorithm might be the one that checks for bias, might be the one that checks for proxy variables or skewness in the data that shouldn’t be there, and it would constantly have this battle. Every decision, every time, it would be re-evaluating and saying, does this still look like what regulatory would need to check for, yes or no? If no, it’s going to retrain the model on the decision side, versus our having to retrain the regulatory side.

Marie: You see the adversarial regulatory models being something that would in this case still be developed at Goldman Sachs or is that something where the New York regulatory system steps in and says, this is the regulatory model that you have to develop against?

Lexy: I think that there are opportunities for multiple levels of this. Certainly from New York, they have regulations. Regulatory could program in specific types of tests, essentially, to prove that it meets regulatory compliance. That’s just one state though. You’ve got all the other states in which they’re offering the card. There are federal-level regulations, there are all these different layers of compliance that you would need to code for. And so I think that there’s a market out there for a regulatory compliance adversarial model that could be programmed with all of the code that needs to be in there to check. That said, it’s very possible that if all you’re doing is checking for that bare minimum, you’ve hit that minimum threshold. This is kind of what Sheila was talking about in our interview with her. The legal threshold is the minimum, the ethical threshold is somewhat higher.

Marie: Agreed.

Lexy: For a bank, they might hit that regulatory legal minimum threshold. But to truly be ethical with their decisions, they may want to apply additional factors or check for additional things to make sure that they go to their own standards beyond just what the regulation tells them. So I think there’s an opportunity there. The other thing is that I always worry that we get into this cat and mouse. We’ve talked about this with anticipating adversaries. When you start to anticipate the tests you have to meet or beat, you start gaming the system. So if your algorithm for your decisions knows what it has to test against, it can maybe find ways around it that’ll meet what the regulation says, but maybe doesn’t meet the spirit of the law or regulation.

Marie: That also comes back to how you test and train and feed the model because you’d want to make sure that this kind of goes back through the data science process. How did you train the algorithm and what data did you use to train it and then once you have the model set up and it’s ready for production, how do you continue to monitor it and make sure that it’s doing what you want it to and if it’s not doing what you want it to, how do you retrain it and adjust it and make sure that it’s working the way that you had envisioned it working?
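The ongoing monitoring step raised here could look roughly like the following Python sketch. It is a plain sliding-window check, not an adversarial neural net, and every name in it (`FairnessMonitor`, `needs_review`, the window size, the 0.8 ratio) is a hypothetical choice made for illustration.

```python
from collections import deque
from statistics import mean

class FairnessMonitor:
    """Track recent production decisions and flag when group averages diverge."""

    def __init__(self, window=100, min_ratio=0.8):
        self.recent = deque(maxlen=window)  # oldest decisions fall off automatically
        self.min_ratio = min_ratio          # assumed tolerance, not a legal standard

    def record(self, group, limit):
        self.recent.append((group, limit))

    def needs_review(self):
        by_group = {}
        for group, limit in self.recent:
            by_group.setdefault(group, []).append(limit)
        if len(by_group) < 2:
            return False  # nothing to compare yet
        averages = [mean(limits) for limits in by_group.values()]
        top = max(averages)
        return top > 0 and (min(averages) / top) < self.min_ratio

monitor = FairnessMonitor(window=4)
for group, limit in [("M", 10000), ("F", 9500), ("M", 10000), ("F", 9000)]:
    monitor.record(group, limit)
before = monitor.needs_review()

# Two much lower limits arrive; the window now holds mostly low "F" decisions.
monitor.record("F", 1000)
monitor.record("F", 1000)
after = monitor.needs_review()

print(before, after)
```

In practice a flag like this would feed the retraining and adjustment loop being described, with a person deciding whether the divergence reflects bias or a legitimate difference in the applicants.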

Lexy: Absolutely. It’s part of retaining responsibility for the outcomes and making sure that over time you’re adjusting and keeping the responsibility of the adjustments and the outcomes and so forth. Not that you’re individually accountable to it, but that as an organization, as a group, as a team, you are responsible for ensuring that the outcomes are what you intended.

Marie: So, I think the point that you just made, Lexy, really ties in with the quote that the Observer article ends with. One of the analysts that they quote in this article is from Lux Research. His name is Cole McCollum. He says at the end of this article, this incident should serve as a warning for companies to invest more in algorithm interpretability and testing, in addition to executive education around the subtle ways that bias can creep into AI and machine learning projects. Definitely a lesson for people to take away and to apply to their own projects, and even for us as consumers to take into consideration as we’re looking at these new offerings that are coming onto the market. Thanks everybody for listening to this episode of the Data Science Ethics Podcast. This was Marie Weber and Lexy Kassan. Thanks so much. See you next time.

We hope you’ve enjoyed listening to this episode of the Data Science Ethics podcast. If you have, please like and subscribe via your favorite podcast App. Also, please consider supporting us for just $5 per month. You can help us deliver more and better content.

Join in the conversation at datascienceethics.com, or on Facebook and Twitter at @DSEthics where we’re discussing model behavior. See you next time.

This podcast is copyright Alexis Kassan. All rights reserved. Music for this podcast is by DJ Shahmoney. Find him on Soundcloud or YouTube as DJShahMoneyBeatz.