Episode 6: Google Has a Gorilla Problem – Show Notes

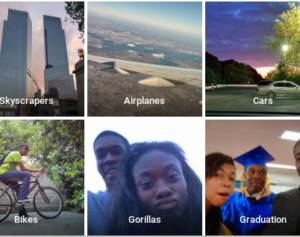

Google is a forerunner in many areas of data science and artificial intelligence. Yet one problem seems insurmountable for them – properly distinguishing between dark-skinned humans and gorillas.

In 2015, Jacky Alciné, an African-American software developer, was looking through his automatically tagged images in Google Photos when he saw a tag of “gorillas” on a picture of himself and a friend. He brought this to Google’s attention on Twitter.

This sparked a long discussion between him and the Chief Architect for Social at Google, Yonatan Zunger. The immediate fix, implemented by Zunger and team, was to remove the tag “gorillas” from all photos – even those of actual gorillas, just in case. It was meant to be a temporary patch. Yet, three years later, the more robust solution hasn’t been implemented. Gorillas are still not tagged in Google Photos.
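The temporary patch described above amounts to a blocklist applied after classification. Here is a minimal sketch in Python, assuming a hypothetical classifier that returns (label, confidence) pairs. The names `BLOCKED_LABELS` and `filter_tags` are made up for illustration and are not Google's actual code.

```python
# Hypothetical illustration only; this is not Google's implementation.
BLOCKED_LABELS = {"gorilla"}

def filter_tags(predictions, blocked=BLOCKED_LABELS):
    """Drop any predicted label that appears in the blocklist."""
    return [(label, score) for label, score in predictions
            if label.lower() not in blocked]

# Made-up classifier output for a photo taken at a zoo.
predictions = [("gorilla", 0.91), ("zoo", 0.84), ("tree", 0.40)]
visible_tags = filter_tags(predictions)
```

A filter like this suppresses the label for every photo, correct or not, which is why a picture of an actual gorilla ends up with no gorilla tag.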

In today’s episode we explore this case study as a quick take and posit ideas on how this happened.

Additional Links on the Google Gorilla Problem

Google Photos Tags Two Black People as Gorillas: Article from The Verge in 2015 when this initially came to light. Google immediately issued an apology.

Google Removed Gorillas from Tags and Still Hasn’t Fixed It: Article from The Verge in 2018 revisiting the Google Photos tagging and bringing to light that this is still not resolved.

When It Comes to Gorillas, Google Remains Blind: Wired article with examples of photos showing no tags even when they are of gorillas.

Episode Transcript

Marie: Welcome to the Data Science Ethics Podcast. I’m Marie Weber and I’m here with Lexy Kassan.

Lexy: Today’s topic is the odd and interesting case of Google and its gorilla problem. This one started a few years ago when a programmer from Brooklyn, I believe it was, notified Google that an image they had posted to their Google Photos had been erroneously tagged as gorillas when it was actually this person and a friend of theirs. This particular individual is African American and was offended by the fact that Google had tagged this image as being of gorillas rather than of people or friends or something like that.

Marie: And specifically it was an automatic tag in the Google Photos feature.

Lexy: Correct. So when you upload something to Google Photos, or if you take a picture on an Android phone, it automatically goes up to Google Photos. Google tries to identify and tag what’s in that image and then classify it. It’ll create galleries for you. It’ll try to group images with the same subject matter into something. Or it’ll do that by trip. So it does it by location if you have your geo-location on and so forth. In this case, it was tagging the image as being of gorillas rather than of people.

Marie: And it also put those images into a gorilla gallery as well.

Lexy: Yes. When Google was notified that this had occurred, Google’s Chief Social Architect, a man by the name of Yonatan Zunger, responded to this individual and told them that they were going to rectify the situation. They were appalled that their algorithm had done this. And the way that they approached rectifying this situation was to immediately remove the ability for Google Photos to tag anything as a gorilla, including gorillas.

Marie: And then subsequently also removed the ability to tag images as apes or as chimpanzees or as other primates.

So in terms of talking about the bias behind this, what does this relate to in your mind, Lexy?

Lexy: The reason behind it is likely a selection bias problem. That’s where if they didn’t have a sufficient number of photos of individuals with very dark skin, they could have accidentally trained the algorithm to identify dark skin as gorillas, in this case. It’s a super unfortunate thing. It’s awful. You should have a better, more varied data set than this. But that’s most likely what had happened.

Going from having too few samples to not tagging anything as gorillas at all is an interesting choice. And at that point it’s really no longer about bias. It’s about the ethics of algorithm training and ongoing support.
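As a rough illustration of the selection-bias point above, a training set can be checked for groups that make up too small a share of the examples. This is a sketch under stated assumptions: the group labels, the `underrepresented` helper, and the `min_share` threshold are all hypothetical, and the sample data is made up.

```python
from collections import Counter

def underrepresented(group_labels, min_share=0.10):
    """Return groups whose share of the data set falls below min_share."""
    counts = Counter(group_labels)
    total = sum(counts.values())
    return {group: count / total
            for group, count in counts.items()
            if count / total < min_share}

# Made-up metadata for ten training photos (not real data).
sample = ["lighter"] * 9 + ["darker"] * 1
flagged = underrepresented(sample, min_share=0.2)
```

A check like this only surfaces the imbalance. Fixing it still means collecting or sampling more varied images before retraining.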

Marie: In terms of some of the research that we’ve done, the information that we’ve seen is that Yonatan Zunger had a couple of quotes. In some of these quotes, he basically expressed that the Google team and he were disappointed that this had happened and that’s why they wanted to address it. But they also pointed out that it can be hard to train an AI. That there can be things that make it difficult like obscured faces and different skin tones and lighting. They definitely are pointing out the fact that it can be difficult, what they’re trying to do. But, at the same time, you would feel like with a company as large as Google, especially with everything that they have in the Google search algorithm, that they would be able to get the data set needed to properly train an AI for this type of application so it can actually recognize things correctly.

In terms of their response, I think there are a couple of things to call out in terms of good ethical procedures. Google did respond. Google did look into the information and what was happening. They did a follow up to say “this is what we’re doing to rectify it.” They made a commitment to investigate and to fix it. So those were all positives.

Lexy: They were and it’s a tremendous credit to Google and to Yonatan Zunger that they actually did respond to this person. That they did do something proactively to remediate the problem.

That said, they also said that they were working on a longer-term solution. Just a few months ago, there were a number of articles that came out indicating that this still had not been fixed. Essentially, even now, if you were to go to the zoo and take a picture of an actual gorilla, Google Photos would not tag that as a gorilla. They’ve still got this filter in place that does not allow images to be tagged as a primate, just in case. They err on the side of caution to make sure that they’re not offending somebody by accidentally tagging a picture of a person as a gorilla in the future. But it still seems not quite right. I don’t know how to phrase it.

Google has so many images that are properly tagged, that have metadata around them. Marie, I know you can speak to this when it comes to how images are classified and tagged for search engines to be able to pick them up and identify what’s in them. That data set – all the photos out there that Google has ever cached – is available to them to train an AI on. For them to say “Oh it’s difficult if there’s an obscured face. It’s difficult if there’s a range of skin tone.” I feel like it’s a cop out. And I know how hard it is to train these. I still feel like it’s a cop out, with that much data to be used.

Marie: And also as much time as has passed in this case. We’re talking about something that originally was reported to them in 2015. We’re now looking at 2-3 years later and it’s still an issue. So I think that’s part of the reason why people are bringing this back up as part of the discussion and saying “this is still an issue because it hasn’t been fixed.” So on the one end, the initial response was very positive from an ethical standpoint in saying “hey we’re looking into this. We don’t want this to happen. We’re sensitive to how this could make people feel.” But then to not have a better solution in place after this much time has passed, I think that’s where the other side of the ethical feeling comes in – where it’s like there should be something more – more progress on this front than there has been.

Lexy: What’s really fascinating about it from that perspective is that one of the leading tools that’s used to do image classification and image identification came from Google. It’s a newer framework called TensorFlow and Google developed it specifically for this type of purpose. It is now one of the go-to standards in data science for image processing and image recognition. They came up with it! It should be powerful enough to handle this situation.

I think what is likely happening is – honestly I think that Google has the technology. I mean, of anyone, Google has the technology. I think it’s a conscious choice that Google does not want to take the risk of accidentally offending someone in this particular manner again.

That’s an interesting choice, if that’s the choice that they’re making, because there are so many different types of things that can be offensive. How many of them are then not going to be tagged? At what point are we obscuring the ability to get to information by disallowing it on the chance that it’s offensive? I don’t know that I have an answer to it. It’s just a very interesting question to say “how far is too far when we go to try to not offend people.”

Marie: And I think some of the other articles that we found around this particular instance talked about some other examples and the fact that, as humans, as we’re growing up, we understand and we learn different nuances. One of the other examples that was referred to was images of Native Americans in their Native American dress and it being tagged as a costume. It’s not actually a costume, that’s their actual ceremonial dress. If they’re limiting tags like that, it’s like you said, they don’t want to offend people. They want to be sensitive to people’s different cultures and heritages. But if that’s getting tagged as a costume and they say “okay we’re not going to tag things as costumes anymore,” then we’re not able to detect something like a kid’s dinosaur costume either.

As humans, we learned those nuances and we have years of experience growing up, being able to learn those things, to be able to look at something and say “OK, this makes sense in this context or this context.” We’re trying to get AI up to speed to be able to do similar things and there are challenges. But, at the same time, we want the data to be there. So when is it better to have the data be there or when is it better to not have the data be there? I guess that’s kind of the question.

Lexy: They’re disallowing specific tags. It’s not that they’re saying “we’re not going to use the algorithm at all or we’re going to remove the data.” They’re not taking away the photo – they’re simply not allowing it to tag it in this particular way. It’s an interesting conundrum of how much or how little is the right amount of data? And how well do we, as humans, convey our sense of context to an AI? Which is a much larger topic.

Marie: It’ll be interesting to see how Google addresses it now that this has kind of been brought up again. If they use any of the other technologies that they developed for other areas to maybe come back and address this area in a more complete or substantial way. Or if they continue to take a very conservative approach and just continue to basically have the tags be unavailable.

Lexy: I have every intention of going to the local zoo and taking pictures of gorillas and seeing what Google does with them, at some point in the near future, to see what happens.

Marie: Especially since you have a Google phone.

Lexy: It’s true. So Google, if you’re listening, figure it out.

Marie: Well, I think that’s a good spot to wrap up.

That was a quick take discussing the algorithm that Google had that was inappropriately tagging some photos as gorillas. If you want more information on this topic, you can look at the resource links below. This has been Marie and Lexy with the Data Science Ethics Podcast.

Lexy: Thanks so much.

I hope you’ve enjoyed listening to this episode of the Data Science Ethics Podcast. If you have, please like and subscribe via your favorite podcast app. Join in the conversation at datascienceethics.com, or on Facebook and Twitter at @DSEthics where we’re discussing model behavior. See you next time.

This podcast is copyright Alexis Kassan. All rights reserved. Music for this podcast is by DJ Shahmoney. Find him on Soundcloud or YouTube as DJShahMoneyBeatz.
